AI in Healthcare: Addressing Data Privacy Concerns - Tech News

Recent discussions have brought to light significant controversies surrounding AI projects, particularly in the medical field. One such project, "Medisafe" (藥倍安心), developed by a high school student, has won multiple awards, including recognition at the Geneva International Exhibition of Inventions. Medisafe is an AI-powered web application designed to improve medication safety by cross-referencing prescriptions with patient data. It aims to catch prescription errors automatically by checking each doctor's prescription against the patient's allergy history, long-term medication records, and clinical conditions such as liver and kidney function.

However, the project has sparked debate regarding potential patient data leakage. Is this concern valid? And if so, what measures can developers take to ensure privacy? This blog post will explore:

- The validity of data leakage concerns regarding Medisafe.

- Industry standards for medical data privacy.

- How developers can prevent data breaches.

This information will be particularly relevant to developers building privacy-sensitive applications and anyone interested in large language model (LLM) development.

Data Leakage Concerns with Medisafe

Reports indicate that Pakistani developers were involved in Medisafe's development and, according to the project owner's presentation, that its backend is powered by the Microsoft Azure OpenAI Platform. Potential data leakage points therefore include:

- The backend managed by the Pakistani developers: There is currently no publicly available privacy policy covering this component, so it is unclear how patient data is stored, processed, or accessed. This is where the privacy concern is most serious.

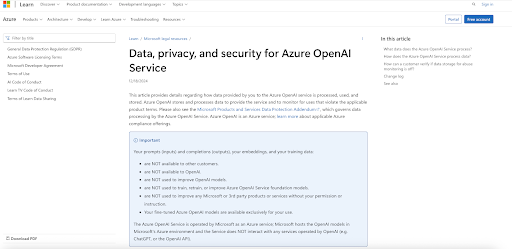

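- Microsoft Azure OpenAI Platform: Microsoft's published data, privacy, and security terms state that prompts and completions sent to Azure OpenAI are not shared with OpenAI and are not used to train models. Microsoft also notes that, by default, this data may be retained for a limited period for abuse monitoring, which eligible customers can apply to have modified.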

It's crucial to distinguish between using OpenAI's public ChatGPT service directly and accessing OpenAI models through a cloud provider like Microsoft Azure, which often provides additional privacy guarantees. OpenAI's own privacy policy, by contrast, explicitly describes the personal data it collects from users of its services.

Industry Standards for Medical Data: HIPAA

When dealing with medical data, the Health Insurance Portability and Accountability Act (HIPAA) is the gold standard for patient data protection in the United States. Key aspects of HIPAA compliance include:

- Protecting Patient Data Privacy and Limiting Access: Strict access controls and role-based permissions are essential. Software must adhere to the "minimum necessary" standard, ensuring users only access the Protected Health Information (PHI) required for their specific job functions.

- Securing PHI with Technical Safeguards: PHI must be encrypted both at rest and in transit (e.g., using TLS for data in transit). Strong user authentication (such as multi-factor authentication), comprehensive audit trails, and regular risk assessments are vital to prevent unauthorized access or breaches.

- Enabling Patient Rights and Maintaining Compliance: Systems should allow patients to access, inspect, and obtain copies of their health data while safeguarding privacy. Applications must support HIPAA rules by providing audit logs, facilitating consent management, and enabling secure third-party data sharing only under appropriate Business Associate Agreements (BAAs).
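Two of these requirements, the "minimum necessary" rule and audit trails, can be sketched in a few lines. The Python example below is a minimal illustration under stated assumptions, not a compliance implementation: it assumes a hypothetical role-to-field policy (`ROLE_ALLOWED_FIELDS`) and records every disclosure in an append-only log where each entry carries the SHA-256 hash of the previous one, so tampering with an earlier entry becomes detectable. All names here (`AuditLog`, `read_record`, the roles and field names) are invented for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical "minimum necessary" policy: each role sees only the PHI fields
# its job function needs. Roles and field names are invented for illustration.
ROLE_ALLOWED_FIELDS = {
    "pharmacist": {"patient_id", "allergies", "medications", "renal_function", "liver_function"},
    "billing": {"patient_id", "insurance_id"},
}

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log; each entry stores the SHA-256 hash of the previous entry,
    so editing an earlier entry breaks the chain."""

    def __init__(self):
        self.entries = []

    def append(self, user, role, patient_id, fields):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "patient_id": patient_id,
            "fields": sorted(fields),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self):
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


def read_record(record, user, role, log):
    """Return only the fields the role may see, and log exactly what was disclosed."""
    allowed = ROLE_ALLOWED_FIELDS.get(role, set())  # unknown roles see nothing
    visible = {k: v for k, v in record.items() if k in allowed}
    log.append(user, role, record.get("patient_id"), visible.keys())
    return visible


log = AuditLog()
record = {"patient_id": "P001", "name": "Jane Doe", "allergies": ["penicillin"],
          "medications": ["metformin"], "renal_function": "eGFR 55", "insurance_id": "INS-123"}
print(sorted(read_record(record, "alice", "pharmacist", log)))
# ['allergies', 'medications', 'patient_id', 'renal_function']
print(log.verify())  # True
log.entries[0]["user"] = "mallory"  # simulate tampering with a past entry
print(log.verify())  # False
```

A plain hash chain only detects edits by someone who cannot recompute the hashes. In practice you would sign entries with a key held outside the application (for example with HMAC) or write them to write-once storage, and enforce the role policy on the server, never in the client.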

Preventing Data Leaks: Best Practices for Developers

When developing applications that handle sensitive data, especially medical information, developers must exercise extreme caution:

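- Avoid Free Services and Direct APIs (Unless Their Terms Say Otherwise): Many free AI services and some direct API offerings (from providers such as Gemini, ChatGPT, and Claude) may collect your data or use it to improve their AI models. Check each provider's data usage terms before sending any sensitive information.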

- Prioritize Cloud Providers with Strong Privacy Guarantees: Opt for services from reputable cloud providers that explicitly state their data handling and privacy policies, such as:

- Microsoft Azure OpenAI Platform: Offers specific data privacy assurances.

- Amazon Bedrock: Provides similar guarantees.

- Google Cloud: While Google Cloud generally offers HIPAA compliance, it's crucial to verify the data usage policies for each specific generative AI service and deployment, as not all generative AI data is excluded from model improvement by default. Refer to Google's official documentation for detailed guidance on achieving HIPAA compliance.
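One way to apply these practices in code is to gate every outbound LLM request: send full text only to endpoints your organization has verified are covered by a BAA and excluded from model training, and strip identifiers from anything else. The Python sketch below assumes a hypothetical exact-host allowlist (`APPROVED_PHI_HOSTS`, including a made-up Azure resource name) and uses a few simple regular expressions for email addresses, North American phone numbers, and a hypothetical `MRN-` record-number format. Placeholder tokens are reused for repeated values, and the token-to-value mapping never leaves your side, so the model's answer can be re-identified locally.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: exact hosts your organization has verified are covered
# by a signed BAA and excluded from model training. "my-resource" is a placeholder.
APPROVED_PHI_HOSTS = {
    "my-resource.openai.azure.com",             # Azure OpenAI resource (placeholder name)
    "bedrock-runtime.us-east-1.amazonaws.com",  # Amazon Bedrock runtime endpoint
}

# Illustrative identifier patterns only; order matters (MRN before PHONE).
PATTERNS = {
    "MRN": re.compile(r"\bMRN[-:\s]?\d+\b"),                  # hypothetical record-number format
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),  # North American format only
}


def redact(text, known_names=()):
    """Replace identifiers with tokens like [NAME_1]; return (text, mapping)."""
    mapping = {}  # token -> original value; keep this on your own side

    def make_sub(label):
        def _sub(match):
            value = match.group(0)
            for token, original in mapping.items():
                if original == value:  # reuse the token for repeated values
                    return token
            token = f"[{label}_{len(mapping) + 1}]"
            mapping[token] = value
            return token
        return _sub

    for name in known_names:  # names the application already knows, e.g. from the record
        text = re.sub(re.escape(name), make_sub("NAME"), text)
    for label, pattern in PATTERNS.items():
        text = pattern.sub(make_sub(label), text)
    return text, mapping


def restore(text, mapping):
    """Put the original values back into a model response, locally."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


def prepare_payload(endpoint_url, text, known_names=()):
    """Return (payload_text, mapping) for an outbound LLM request."""
    parsed = urlparse(endpoint_url)
    if parsed.scheme != "https":
        raise ValueError("refusing to send patient data over a non-TLS connection")
    if parsed.hostname in APPROVED_PHI_HOSTS:
        return text, {}  # BAA-covered endpoint: full text allowed by this policy
    return redact(text, known_names)  # anything else only sees placeholders


note = "Jane Doe (MRN-00123, jane@example.com, 555-123-4567) is allergic to penicillin."
safe, mapping = prepare_payload("https://api.openai.com/v1/chat/completions", note, ["Jane Doe"])
print(safe)  # [NAME_1] ([MRN_2], [EMAIL_3], [PHONE_4]) is allergic to penicillin.
print(restore(safe, mapping) == note)  # True
```

Regex redaction like this misses many identifiers and does not by itself meet HIPAA's de-identification standard; treat it as an extra layer of defense, not a substitute for a BAA-covered service. A hostname also says nothing about your contract, so the allowlist should mirror agreements you have actually verified.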

Ethical Responsibility and Due Diligence

Any application that raises privacy concerns, especially in healthcare, demands a thorough understanding of regulations like HIPAA and a firm commitment to ethical responsibility. Never take data privacy lightly.

What About ITDOGTICS?

As the author of ITDOGTICS, I am acutely aware of privacy considerations. Most of our applications call the Google Gemini API directly, without a backend of our own. Therefore, by using ITDOGTICS services, you agree to Google's Privacy Policy and Terms of Service, which govern how your data is handled by Google's AI services and backend.
