Build AI Code Generation Tools for Large-Scale Projects in Python? Part 1 - Development Diary and Discussion

Building AI Code Generation Tools from Scratch in Python 3: A Journey from Zero to Everything (Part 1)

Can we create AI code generation tools in Python 3 from the ground up? This is an open-ended question, and I don't claim to have all the answers. I welcome discussion and the exchange of ideas, as I'm always open to learning. This exploration also shows how I built the first prototype of the ITDOGTICS application in just three days.

I believe current AI technology has limitations, but I'll address those in a future blog post, where I'll delve into how AI works and why it has become so prominent. If you're familiar with basic AI concepts, feel free to skip ahead to the "Scope of this Blog Post" section.

This blog post assumes you have a basic understanding of Python 3. If you don't, it might be challenging to follow along.

Disclaimer:

I won't provide the complete project code, as I encourage discussion and collaboration.

- For beginners: Use this as a reference to build your own project and join the conversation. I'm happy to help with any learning challenges.

- For experienced developers: Please share your insights and discuss better approaches.

Objective:

- We know what we want to build, but we'd rather start from a generated template than from scratch.

- Automate code writing for a large project (multiple files, not just a single function) using Python 3.

- Ideally, eliminate the need to pay for AI code generation tools.

Understanding AI Code Generation:

AI code generation involves three key components:

- Prompt: The input to the Large Language Model (LLM).

- LLM: Models like ChatGPT, Gemini, and Claude.

- Code Generation Tools: Tools like GitHub Copilot, Windsurf, and Cursor, which use LLMs to automate code output.

Building an LLM is expensive, requiring vast amounts of data and processing power. For this project, I'll use Claude (I lack the resources to train a new LLM). Therefore, we'll focus on the Prompt and how to leverage an existing LLM.
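To make "leveraging an existing LLM" concrete, here is a minimal sketch of a single prompt/response round trip with the official anthropic Python SDK. The function name ask_llm and the max_tokens default are my own choices, not part of the SDK. The client is passed in so the function can be tested offline with a stub, and the model name is left for you to fill in.

```python
def ask_llm(client, prompt: str, model: str, max_tokens: int = 4096) -> str:
    """Send one user prompt to Claude and return the text of its reply.

    `client` is expected to be an anthropic.Anthropic() instance (hypothetical
    wrapper, not an SDK function); injecting it makes offline testing easy.
    """
    response = client.messages.create(
        model=model,
        max_tokens=max_tokens,
        messages=[{"role": "user", "content": prompt}],
    )
    # The reply is a list of content blocks; keep and join only the text blocks.
    return "".join(block.text for block in response.content if block.type == "text")


# Real usage (requires `pip install anthropic` and the ANTHROPIC_API_KEY env var):
# import anthropic
# client = anthropic.Anthropic()
# print(ask_llm(client, "Write hello world in Python.", model="<your Claude model>"))
```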

Observations:

Most AI code generation tools share these features:

- Codebase analysis.

- Code generation.

Scope of this Blog Post:

- We define the requirements for what we want to build.

- The tool generates a project (multiple files, readable and maintainable code).

- Developers can then refine the generated code (further automation will be discussed in a later post).

Implementation Details:

Required Libraries:

- anthropic (for the Claude API)

- os (for automation)

- subprocess (for automation)

- shutil (for file handling)

- re (regular expressions)

Step 1: Defining the Requirements:

What information does the AI need to build and run the project?

- System overview

- Framework, tools, and libraries

- Features

- File structure

- Implementation details

Instead of writing all this manually, why not use AI to generate it? You can use your existing knowledge to create an initial prompt. An example, used for my expense tracker, can be found here:
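As a sketch of that idea, the five sections above can be turned into a meta-prompt that asks the LLM to write the requirements document for you. This is not the expense-tracker prompt mentioned above; the wording and the build_requirements_prompt name are assumptions.

```python
# The five kinds of information the AI needs (from the list above).
REQUIREMENT_SECTIONS = [
    "System overview",
    "Framework, tools, and libraries",
    "Features",
    "File structure",
    "Implementation details",
]


def build_requirements_prompt(idea: str, sections=REQUIREMENT_SECTIONS) -> str:
    """Build a prompt asking the LLM to expand a short idea into a requirements document."""
    section_list = "\n".join(f"{i}. {name}" for i, name in enumerate(sections, start=1))
    return (
        "You are a senior software architect.\n"
        f"Project idea: {idea}\n\n"
        "Write a requirements document for this project with exactly these sections:\n"
        f"{section_list}\n"
        "Be specific enough that another engineer could build the project from it."
    )


print(build_requirements_prompt("A web-based personal expense tracker"))
```

You would then send the returned string to the model and keep its reply as the requirements document for the later steps.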

Step 2: Building the Code:

This simulates a software engineer's daily workflow, which can be broken down into five procedures:

- Determine workflow

- Project initialization and dependency installation

- Code file generation

- Compilation

- Execution

We'll need basic functions to automate file creation, setup, and other commands:

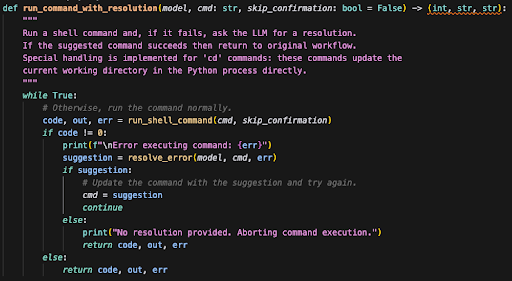

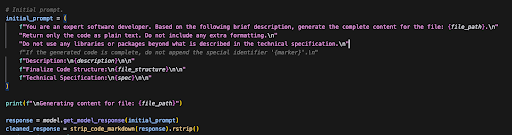

- run_shell_command: Controls the OS for automation.

- run_command_with_resolution: Handles potential errors from run_shell_command. A non-zero return code indicates an error, which needs resolution.

- resolve_error: Sends the error to the LLM for suggested solutions.

- strip_code_markdown: Extracts code from markdown.
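The original helpers are only shown as screenshots, so the following is my own hedged reconstruction of what they might look like, not the code from the images. Parameter names, the prompt wording, and the max_attempts retry limit are assumptions; llm stands for any function that takes a prompt string and returns the model's reply text.

```python
import re
import subprocess
from typing import Callable

FENCE = "`" * 3  # three backticks, i.e. a markdown code fence


def run_shell_command(command: str, cwd: str = ".") -> subprocess.CompletedProcess:
    """Run a shell command and capture its exit code, stdout, and stderr."""
    return subprocess.run(command, shell=True, cwd=cwd, capture_output=True, text=True)


def strip_code_markdown(text: str) -> str:
    """Return the body of the first fenced code block, or the text itself if there is none."""
    pattern = re.escape(FENCE) + r"[^\n]*\n(.*?)" + re.escape(FENCE)
    match = re.search(pattern, text, re.DOTALL)
    return (match.group(1) if match else text).strip("\n")


def resolve_error(command: str, result: subprocess.CompletedProcess,
                  llm: Callable[[str], str]) -> str:
    """Send the failing command and its output to the LLM; return a suggested fix command."""
    prompt = (
        f"The command `{command}` failed with exit code {result.returncode}.\n"
        f"stdout:\n{result.stdout}\n"
        f"stderr:\n{result.stderr}\n"
        "Reply with one shell command that fixes the problem, inside a code block."
    )
    return strip_code_markdown(llm(prompt))


def run_command_with_resolution(command: str, llm: Callable[[str], str],
                                cwd: str = ".", max_attempts: int = 3) -> subprocess.CompletedProcess:
    """Run a command; while it exits non-zero, apply an LLM-suggested fix and retry."""
    result = run_shell_command(command, cwd)
    attempts = 0
    while result.returncode != 0 and attempts < max_attempts:
        fix = resolve_error(command, result, llm)
        # Caution: this executes model output without human review.
        run_shell_command(fix, cwd)
        result = run_shell_command(command, cwd)
        attempts += 1
    return result
```

Be careful with the retry loop: it runs shell commands suggested by the model without review, so it is safest in a throwaway directory, container, or VM.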

Determining the Workflow (Step 0):

This crucial step drives the entire project and generates the commands needed later. The workflow is defined in JSON format for easy parsing by the program.
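The post doesn't show the JSON schema, so the sketch below assumes a hypothetical one: one key per stage, each holding a list of shell commands. It parses the reply with the standard json module, checks that every expected stage is present, and flattens the stages into execution order. The stage names and commands are illustrative only.

```python
import json

# Hypothetical workflow as the LLM might return it (schema and commands are assumptions).
EXAMPLE_WORKFLOW = """
{
  "project_name": "expense_tracker",
  "init": ["git init"],
  "install": ["pip install -r requirements.txt"],
  "compile": ["python -m compileall -q ."],
  "run": ["python main.py"]
}
"""

# Order in which the command stages are executed.
STAGES = ["init", "install", "compile", "run"]


def parse_workflow(raw: str) -> dict:
    """Parse the LLM's JSON reply and check that every expected stage is present."""
    workflow = json.loads(raw)
    missing = [stage for stage in STAGES if stage not in workflow]
    if missing:
        raise ValueError(f"workflow is missing stages: {missing}")
    return workflow


def commands_in_order(workflow: dict) -> list:
    """Flatten the per-stage command lists into a single execution order."""
    return [cmd for stage in STAGES for cmd in workflow[stage]]


for cmd in commands_in_order(parse_workflow(EXAMPLE_WORKFLOW)):
    print(cmd)
```

Each command could then be handed to run_command_with_resolution. File generation is not a shell command, so in this sketch it would happen in Python between the "install" and "compile" stages.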

The commands in the workflow are then executed with the run_command_with_resolution function.

For large projects, code generation can be divided into two processes:

- Obtain the file structure and file descriptions.

- Generate the code for each file.

Let the LLM determine the file structure, including descriptions to inform later code generation.
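One way to ask for the file plan is to request a JSON array of path/description pairs. In this sketch, plan_file_structure, the prompt wording, and the JSON shape are assumptions; llm is any function that takes a prompt string and returns the model's reply.

```python
import json
from typing import Callable, Dict, List

FENCE = "`" * 3  # three backticks, i.e. a markdown code fence


def plan_file_structure(requirements: str, llm: Callable[[str], str]) -> List[Dict[str, str]]:
    """Ask the LLM for the project's files, each with a description for later generation."""
    prompt = (
        "Based on the requirements below, list every file the project needs.\n"
        'Reply with JSON only: [{"path": "relative/path.py", "description": "what this file does"}]\n\n'
        f"{requirements}"
    )
    reply = llm(prompt).strip()
    # Tolerate a reply wrapped in a markdown code fence (e.g. a json-tagged block).
    if reply.startswith(FENCE):
        reply = reply.split("\n", 1)[1].rsplit(FENCE, 1)[0]
    files = json.loads(reply)
    # Keep only well-formed entries so later steps can rely on both keys.
    return [f for f in files if isinstance(f, dict) and "path" in f and "description" in f]
```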

For each file:

- Create the directory.

- Create the file.

- Generate the content.

This finally creates the initial project structure.
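The per-file loop can be sketched as below, assuming the file plan is a list of dictionaries with "path" and "description" keys. The generate_project name is hypothetical, and passing the full file list into every prompt is my own addition, meant to give the model some awareness of the rest of the project. In practice you would probably also run each reply through strip_code_markdown before writing it.

```python
import os
from typing import Callable, Dict, List


def generate_project(root: str, files: List[Dict[str, str]], requirements: str,
                     llm: Callable[[str], str]) -> List[str]:
    """Create each planned file under root and fill it with LLM-generated code."""
    root_abs = os.path.abspath(root)
    all_files = "\n".join(f"- {f['path']}: {f['description']}" for f in files)
    written = []
    for entry in files:
        path = os.path.abspath(os.path.join(root_abs, entry["path"]))
        # Skip paths that would escape the project directory (e.g. "../x.py").
        if os.path.commonpath([root_abs, path]) != root_abs:
            continue
        # 1. Create the directory.
        os.makedirs(os.path.dirname(path), exist_ok=True)
        # 3. Generate the content, giving the model the requirements and the file list.
        prompt = (
            f"Requirements:\n{requirements}\n\nProject files:\n{all_files}\n\n"
            f"Write the complete contents of {entry['path']} ({entry['description']}). "
            "Return only the code."
        )
        content = llm(prompt)
        # 2. Create the file and write the generated content into it.
        with open(path, "w", encoding="utf-8") as fh:
            fh.write(content)
        written.append(path)
    return written
```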

Challenges:

- Token exhaustion: Large files can exceed the model's output token limit, so the generated code gets cut off.

- Code skipping: The LLM might skip parts of the implementation.

- Inconsistent code generation: Function names don't always match between files (one file may call a function that another file defines under a different name).
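With the Claude API, token exhaustion is at least detectable: when a reply is cut off by the max_tokens limit, the response's stop_reason is "max_tokens". A possible mitigation, which is my suggestion rather than something tested in this project, is to prefill the assistant turn with the partial output and ask the model to continue. The function name and the max_rounds limit are assumptions. Note that this only works around the output limit; the prompt itself still has to fit in the model's context window.

```python
def generate_with_continuation(client, prompt: str, model: str,
                               max_tokens: int = 4096, max_rounds: int = 5) -> str:
    """Ask Claude for a reply and keep asking it to continue while the reply is cut off.

    `client` is expected to be an anthropic.Anthropic() instance (hypothetical helper).
    """
    text = ""
    for _ in range(max_rounds):
        messages = [{"role": "user", "content": prompt}]
        partial = text.rstrip()
        if partial:
            # Prefill the assistant turn with the output so far so the model continues
            # from there. Trailing whitespace is trimmed because the API rejects a
            # final assistant message that ends with whitespace.
            text = partial
            messages.append({"role": "assistant", "content": partial})
        response = client.messages.create(model=model, max_tokens=max_tokens, messages=messages)
        text += "".join(block.text for block in response.content if block.type == "text")
        if response.stop_reason != "max_tokens":
            break  # finished, or stopped for a reason other than the length limit
    return text
```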

Initial Thoughts on Overcoming Challenges:

- Chunking: Break down files and use a diff-like approach.

- Code skipping: Refine the prompt.

- Contextual awareness: Include relevant code from other files in the prompt.
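For contextual awareness, one lightweight option (assuming the generated project is in Python) is to extract the signatures of functions and classes from files that have already been generated and include them in the prompt for the next file, so the model can reuse existing names instead of inventing new ones. This sketch uses the standard ast module; summarize_python_api and build_context are hypothetical names.

```python
import ast
from typing import Dict


def _signature(node) -> str:
    # Simplified: only regular positional parameters are listed
    # (no *args, **kwargs, keyword-only parameters, defaults, or annotations).
    args = ", ".join(a.arg for a in node.args.args)
    return f"def {node.name}({args})"


def summarize_python_api(source: str) -> str:
    """List the top-level functions and classes (with their methods) defined in source."""
    lines = []
    for node in ast.parse(source).body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            lines.append(_signature(node))
        elif isinstance(node, ast.ClassDef):
            lines.append(f"class {node.name}")
            for item in node.body:
                if isinstance(item, (ast.FunctionDef, ast.AsyncFunctionDef)):
                    lines.append("    " + _signature(item))
    return "\n".join(lines)


def build_context(generated: Dict[str, str]) -> str:
    """Format the API of already-generated files (path -> source) for the next prompt."""
    parts = []
    for path, source in generated.items():
        try:
            summary = summarize_python_api(source)
        except SyntaxError:
            summary = "(could not be parsed)"
        parts.append(f"# {path}\n{summary}")
    return "\n\n".join(parts)
```

Sending signatures rather than whole files also keeps the prompt small, which helps with the token limit as well.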

Next Steps:

In the next blog post, I'll share my test results on overcoming compilation errors (or at least on making the project runnable).
