The AI Boom: Why Is Everyone Talking About It? - Tech Explanation

AI is everywhere! ChatGPT made AI a household name, and companies like Nvidia are seeing huge success.

What Are All These AI Tools?

So many AI tools are popping up, it can be confusing! Here's a simple breakdown:

- AI Models: These are the brains of the operation. Think of them as the engine that powers everything. Examples include OpenAI's GPT models (the ones behind ChatGPT), Anthropic's Claude, Google's Gemini, and DeepSeek.

- AI Tools: These use AI models to do something. They're like apps that use the AI brain. Examples include Perplexity (for searching) and Cursor (for coding).

Some tools even have their own models, which can make things a little more complex. An upcoming post will discuss the AI models.

AI: It's Not as New as You Think

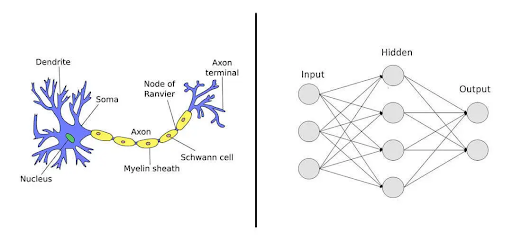

The idea of AI has been around for a while! AI is technology that tries to simulate how the brain works in order to build intelligence. The first paper on AI, way back in 1943, explored how the neurons in our brains could be described with math. People wondered, "If we understand how we think, can we build computers that think like us?"

Important work continued in the 1950s, including Alan Turing's ideas about "thinking" machines. So, AI is actually over 70 years old!

Why Now? Thank Gamers!

Why is AI suddenly so popular now? We owe a lot to gamers! Nvidia, a company that makes GPUs (Graphics Processing Units), originally focused on making video games look better.

How Do GPUs (Nvidia) and AI Work Together?

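Computer graphics need lots of calculations. Imagine doing them all yourself: it would take forever! CPUs (Central Processing Units) are like small teams of workers. GPUs, like the ones Nvidia makes, are like huge teams, sometimes with thousands of workers! Training an AI model needs the same kind of work, huge numbers of simple calculations done at the same time, so hardware built for games turned out to be a great fit for AI.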
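
Here is a rough sketch of the "team of workers" idea in Python. It is only an illustration: the `brighten` function, the tiny eight-pixel "image," and the worker count are all made up for this example, and Python threads on a CPU will not give GPU-like speed. The point is the shape of the work: every pixel can be handled on its own, so the job splits cleanly across many workers.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """Brighten one grayscale pixel (0-255). No pixel depends on any other."""
    return min(255, pixel + amount)


def brighten_serial(pixels: list[int]) -> list[int]:
    # One worker walks through every pixel in order.
    return [brighten(p) for p in pixels]


def brighten_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    # Split the image into chunks and hand one chunk to each worker.
    size = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(brighten_serial, chunks)  # map keeps chunk order
    return [p for chunk in results for p in chunk]


# A made-up 8-pixel "image" for illustration.
image = [0, 100, 200, 250, 30, 60, 90, 120]
print(brighten_parallel(image))  # [40, 140, 240, 255, 70, 100, 130, 160]
```

Both versions give the same answer. The parallel one just shares the work, which is what a GPU does with thousands of workers instead of four.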

How Does AI Basically Work? Why Did AI Algorithms Come About?

Engineers tried to build AI models to work something like our brains, simulating how we think. Let's look at it from a programming perspective:

Input -> Logic -> Output

This is similar to how we act:

Perceive (see/hear) -> Process (think) -> Act (do)

Usually, programmers focus on the logic. When input comes in, the logic creates the output.

With AI models, we don't figure out the logic ourselves. Instead, we use "training." This lets the logic (the "brain") build itself, and then we can use it for different things.

Without training, it is hard to define the logic or algorithm that produces a specific output. For example, it's hard to list all the rules for telling different kinds of balls apart. So, engineers came up with "training." This avoids the hard work of figuring out the logic. We just tell the AI, "This photo has a basketball," "This photo has a football," and so on. Then we show it a new photo and ask, "Which ball is this?" and it can tell us!
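
To make the difference concrete, here is a minimal Python sketch that puts hand-written logic next to trained logic. It makes big simplifying assumptions: each ball is described by a single number (its weight in grams) instead of a photo, the weights are rough illustrative values rather than measurements, and "training" just means computing a cut-off from labeled examples. Real AI models learn far more complicated logic, but the idea is the same.

```python
def classify_by_rule(weight_g: float) -> str:
    """Hand-written logic: the programmer picks the cut-off (500 g is a guess)."""
    return "basketball" if weight_g > 500 else "football"


def train_threshold(examples: list[tuple[float, str]]) -> float:
    """Trained logic: compute the cut-off from labeled examples."""
    basket = [w for w, label in examples if label == "basketball"]
    foot = [w for w, label in examples if label == "football"]
    # Put the cut-off halfway between the two class averages.
    return (sum(basket) / len(basket) + sum(foot) / len(foot)) / 2


def classify_trained(weight_g: float, threshold: float) -> str:
    # Assumes basketballs are the heavier class, as in the examples below.
    return "basketball" if weight_g > threshold else "football"


# Labeled examples: illustrative weights in grams, not real measurements.
examples = [
    (600, "basketball"), (620, "basketball"), (640, "basketball"),
    (420, "football"), (430, "football"), (440, "football"),
]

threshold = train_threshold(examples)
print(threshold)                         # 525.0
print(classify_trained(610, threshold))  # basketball
print(classify_trained(450, threshold))  # football
```

Nobody typed in the number 525. It came out of the examples, and giving the same code different examples would produce a different rule.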

The logic depends on the model's parameters: the adjustable numbers inside its artificial neurons. Each parameter takes part in a small calculation. With enough of these calculations, we can build a "brain."
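
As a minimal sketch of what "some calculation" means, here is one artificial neuron in Python. The sigmoid function used to squash the result is one common choice and is an assumption here, and the weights and inputs are made-up numbers. The weights and the bias are the parameters: training is the process of adjusting them.

```python
import math


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: a weighted sum of the inputs, squashed into 0..1."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))  # sigmoid activation (an assumed choice)


# Three inputs need three weights plus one bias: four parameters in total.
weights = [0.5, -0.25, 0.0]
bias = 0.0

# Weighted sum: 1.0*0.5 + 2.0*(-0.25) + 3.0*0.0 + 0.0 = 0.0, and sigmoid(0) = 0.5
print(neuron([1.0, 2.0, 3.0], weights, bias))  # 0.5
```

A large model chains together enormous numbers of calculations like this one, which is why the GPU's army of workers matters so much.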

How is AI trained?

There are three main ways to train AI, and they're similar to how we raise a baby:

- Supervised Learning: Imagine teaching a baby the names of objects. You point to a basketball and say, "This is a basketball." Then you point to a football and say, "This is a football." You show the baby more examples and then ask, "Is this a basketball or a football?" If the baby gets it wrong, you give more examples until they learn. That's supervised learning: you provide labeled examples, and the AI learns from them.

- Unsupervised Learning: Now, imagine giving a baby a bunch of LEGO blocks of different shapes. You ask them to group the blocks based on what's similar. The baby will start observing the shapes and might figure out that some are cones and some are cubes. Later, if you give the baby a new LEGO block, they might be able to put it in the correct group. That's unsupervised learning: the AI finds patterns in data without labeled examples.

- Reinforcement Learning: Think about a baby learning to walk. They fall down a lot, but eventually, they learn how to walk through trial and error. That's reinforcement learning: the AI learns by trying different actions and getting feedback (like "good job!" or "try again"). It learns what works best through repeated attempts.
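
The two less familiar styles, unsupervised and reinforcement learning, can be sketched in a few lines of Python. Treat this as a toy under stated assumptions: the grouping function is a one-dimensional version of k-means with exactly two groups, the trial-and-error function is a simple "epsilon-greedy" strategy, and the block sizes and reward chances are made-up numbers. Neither is how large AI models are actually trained, but each shows the core idea.

```python
import random


def group_into_two(values: list[float], rounds: int = 10) -> tuple[float, float]:
    """Unsupervised: split numbers into two groups. No labels are given."""
    a, b = min(values), max(values)  # start the two group centers far apart
    for _ in range(rounds):
        near_a = [v for v in values if abs(v - a) <= abs(v - b)]
        near_b = [v for v in values if abs(v - a) > abs(v - b)]
        a = sum(near_a) / len(near_a)  # move each center to its group's average
        if near_b:
            b = sum(near_b) / len(near_b)
    return a, b


def learn_by_trial(reward_chance: list[float], tries: int = 2000,
                   explore: float = 0.1, seed: int = 0) -> list[float]:
    """Reinforcement: try actions, get a reward of 1 or 0, remember what works."""
    rng = random.Random(seed)
    value = [0.0] * len(reward_chance)  # estimated reward of each action
    count = [0] * len(reward_chance)    # how often each action was tried
    for _ in range(tries):
        if rng.random() < explore:
            action = rng.randrange(len(reward_chance))  # explore: random action
        else:
            action = value.index(max(value))            # exploit: best so far
        reward = 1.0 if rng.random() < reward_chance[action] else 0.0
        count[action] += 1
        # Running average of the rewards seen for this action.
        value[action] += (reward - value[action]) / count[action]
    return value


# Made-up block sizes: three small blocks and three large ones.
print(group_into_two([1.0, 2.0, 3.0, 10.0, 11.0, 12.0]))  # (2.0, 11.0)

# Made-up reward chances: action 1 pays off far more often than action 0.
estimates = learn_by_trial([0.2, 0.8])
print(estimates)  # the estimate for action 1 should end up higher
```

In the first function nobody says which blocks are "small" or "large"; the groups come out of the data. In the second, nobody says which action is better; the program finds out by trying both and keeping score.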

AI Model: We Use Them, But Do We Understand Them?

GPUs helped bring AI to where it is today! But even though AI models are built to simulate neural networks, we don't fully understand how they work. It's like knowing that the brain works, but not exactly how or why.

AI models are complicated, and early ones had a "memory" problem. Imagine a notebook where every page has a problem, but you forget the solution as soon as you turn the page. You can solve the problem on the current page, but you can't remember past solutions.

Early attempts to fix this "memory" problem included "Long Short-Term Memory (LSTM)." Then, in 2017, the paper "Attention Is All You Need" from Google researchers introduced the transformer: the "T" in ChatGPT!

The Catch: A Larger Brain Isn't Always Better

People used to think that more calculations (more neurons, or brain cells) always meant better AI. But DeepSeek changed that! They showed that you can get great results with fewer calculations, which is a big deal for how we build AI. We may discuss DeepSeek in a later post.

Can We Understand AI?

Do we really understand how AI models work? Probably not completely. That's why it can be hard to know why an AI gives a certain answer, just as we can't always tell why another person thinks the way they do.

And of course, engineers turned to AI in the first place because they didn't want to work out all the rules and algorithms by hand. Since nobody wrote the logic down, it's certainly hard to understand!

Is AI "Lying"? Which Models Are Best?

Is AI making stuff up? Why does it say what it says? Which AI model is the best? These are tough questions!

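The "GPT" in ChatGPT stands for "Generative Pre-trained Transformer." It generates text by predicting what is likely to come next, which is why it can sometimes say things that sound right but aren't.

Just like some people are more artistic and others are more logical, different AI models have different strengths (at least at this stage).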

What's Next for AI?

Will AI keep changing? Definitely! Will it follow the same path? Maybe not. There might be even better ways to build AI. Maybe databases will be the next big thing. The future of AI is exciting and full of possibilities!
